The Series

Future of Video Games and Metaverse Industries II

Future of Video Games and Metaverse Industries III

Nowadays, almost everyone has heard something about Industry 4.0 and the IoT (Internet of Things), whether or not they work with technology. According to these trends, production stages will soon be fully automated (robotic), and all electronic devices, robots, etc., will be able to talk and communicate with each other around the globe. To take a playful example, the refrigerator at Leo’s house in the UK and Julia’s refrigerator in Brazil will be able to talk to each other, share information and exchange ideas about the best storage conditions.

Strictly speaking, there is no real innovation here. What there is, however, is a great deal of integration and interoperability, and the network and infrastructure to be established for it are genuinely exciting and a tremendous revolution. Now let us turn back to our main topic, “Game & Metaverse”.

While refrigerators at two different ends of the ocean exchange data with each other, there can’t be anything as foolish as video games or metaverses not being able to exchange data with each other, right?

— Alper Akalin

That data exchange cannot be done with current metaverse infrastructures and game engines. Yes, it’s silly, but that’s how it is. Integration or communication between video games or metaverses either cannot be established or simply is not, due to commercial concerns and technical constraints.

With new trends in the modern world and the Metaverse approach, many integrations will undoubtedly be established between all games, allowing them to work and communicate with each other.

For example, players can easily switch from one game to another with a game character they have. In fact, since every game is a Metaverse in itself, data exchange and interoperability between metaverses (games) are crucial.

- If there is ever to be a genuinely immersive Metaverse or video game industry, it certainly cannot be built on the traditional, static Application-Driven model.

- If there is ever to be a genuinely immersive Metaverse, it will undoubtedly be built on the modern Dynamic & Data-Driven model.

Otherwise, accessing Metaverses and games from anywhere will not be possible, and there will never be integration and interoperability between them. That is a metaverse killer and a game-breaker situation.

All future immersive Metaverses, video games of a certain level, and their design and publishing platforms should have the following characteristics.

- Cloud-Native Platform approach

- Web Browser-Based – No App Required approach

- No-Code Designing & Development approach

- Data-Driven Infrastructure

- Dynamic Data-Driven approach

- Dynamic Asset Loading approach

- Intelligent & Centralized Asset Management approach

- Dynamic Data-Driven 3D Model File Format

- Dynamic Data-Driven 3D Engine

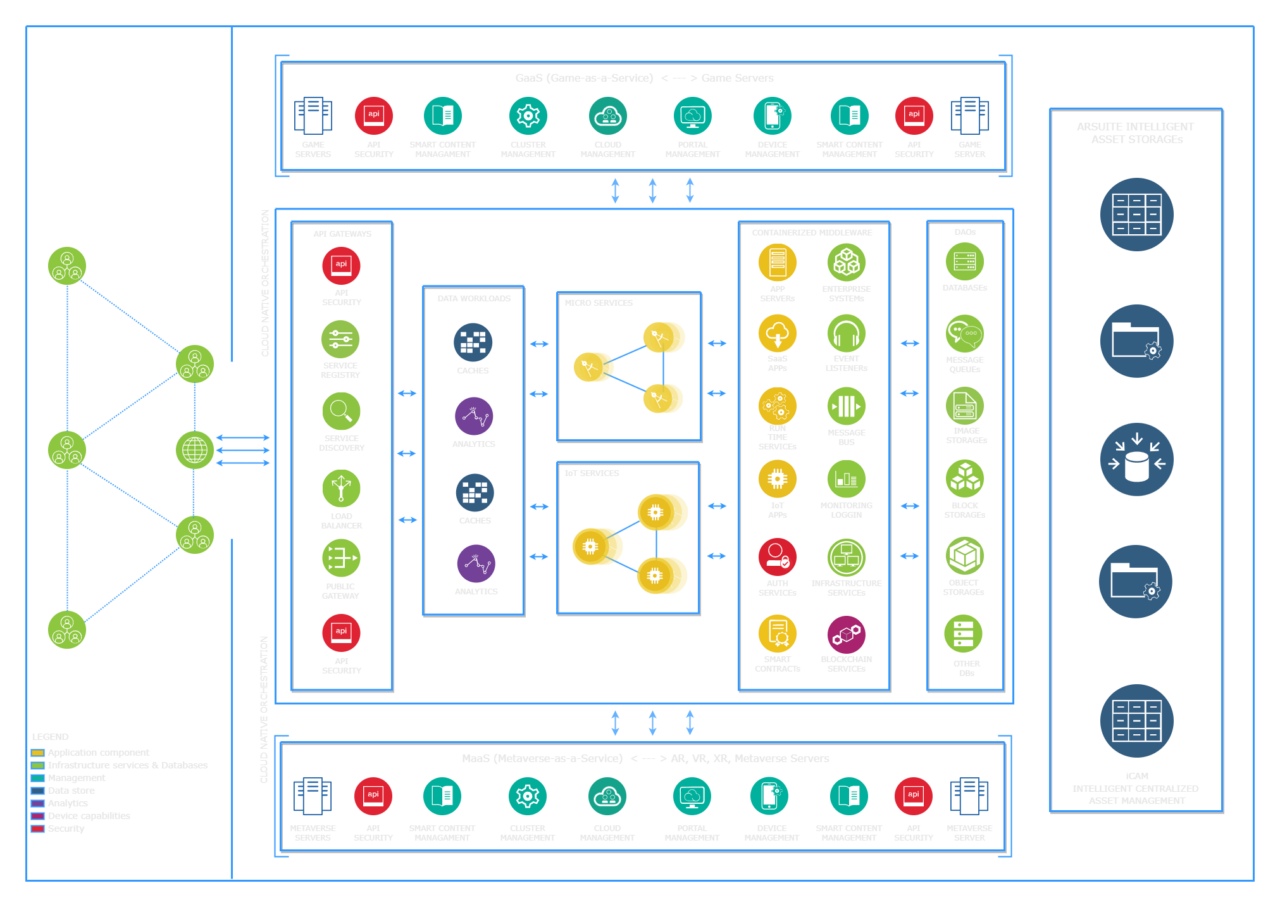

The Arsuite cloud infrastructure is summarized in the diagram above. This infrastructure was built in 2017, and the platform was fully activated in the second half of 2018. If you want to create a similar platform, it should definitely be cloud-native, and you are welcome to take inspiration from this diagram. Of course, many features that belong here are not shown in the diagram due to commercial and space constraints.

Complete Cloud-Native Platform

95% of the game and metaverse design, development and publishing platforms, editors and products in wide use worldwide are static desktop or mobile applications. So, you must download huge editors, IDEs, or tools, and you must design, develop and publish everything on your own computer.

For example, when you use common and well-known game engines, you must download a vast game engine and editor for design. When you create something using these editors, you must build and export those scenes as small APPs. Managing and delivering these small APPs to users is complex and adds extra work.

Note: Most common and well-known game engines were not created to render AR, XR, VR, Metaverse or Web-Game scenes. Therefore, when you work with them, you must deal with a lot of unnecessary code and assets. Most importantly, those engines will never focus on what you do because they are game-oriented.

Arsuite is currently the world’s first and only complete cloud-native 3D design & development platform. All engines and services belonging to Arsuite are accessed completely cloud-natively, and all Arsuite services run from all web browsers.

Web Browser-Based Solution – “Absolutely No-App Required”

No application download is required for any process, such as development or publishing on the Arsuite platform. Everything, every tool and every product can be accessed instantly from any web/mobile browser, anywhere, with no app required.

Complete No-Code Design Tools

For 3D, AR, XR, VR and Metaverse products to become widespread, their design and development processes should be simple and easily accessible. In this context, including people who have yet to gain solid 3D design and software development experience is vital. That is where No-Code design tools come into play.

You have probably seen many advertisements and articles about no-code software development tools. In practice, all of these tools still require you to write roughly 30-60% of the solution as code, so a certain level of software development experience is required. Arsuite offers 100% no-code design tools. Plus, Arsuite also allows you to write custom scripts if you wish.

Data-Driven vs App-Driven Infrastructures

App-Driven Infrastructure – “The Others”

Although many global companies or platforms claim otherwise, they offer a static App-Driven infrastructure. All information, 3D models, materials and textures of a scene created with the App-Driven approach are saved in a small application. In other words, each 3D, AR, XR, VR or Metaverse scene created in the App-Driven model is designed, developed and published separately as a separate APP.

The user or designer who downloads this app can only do as much as the predefined features allow in the app. When a new model, material or texture needs to be added to this scene, the scene must be recreated entirely or updated as a new application.

If you want to create AR, XR and Metaverse scenes for a single 3D model, you must create at least 3 separate APPs, one per scene type, from that single 3D model. If you also want platform-specific optimization (PC + Mac + iOS + Android), you may have to create extra APPs. In other words, 3 x 4 = 12 different APPs should be created. That makes it more challenging to develop, update, publish and use them, or to analyze the data their use generates.
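The arithmetic above can be made concrete with a short sketch. The scene types and platforms come from the paragraph; the helper name `required_app_builds` is hypothetical and exists only for illustration.

```python
from itertools import product

# Scene types and target platforms named in the paragraph above.
SCENE_TYPES = ["AR", "XR", "Metaverse"]
PLATFORMS = ["PC", "Mac", "iOS", "Android"]


def required_app_builds(scene_types, platforms):
    """Return every (scene type, platform) pair that needs its own APP
    in an App-Driven workflow (hypothetical helper, for illustration)."""
    return list(product(scene_types, platforms))


builds = required_app_builds(SCENE_TYPES, PLATFORMS)
print(len(builds))  # 3 x 4 combinations for a single 3D model
```

Every extra scene type or platform multiplies the list, which is why the maintenance burden grows so quickly in this model.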

Suppose there is an e-commerce platform where there are/will be 1000s of products or scenes. Creating, publishing and updating 1000s of APPs for 1000s of products on this platform would not be a wise investment.

When there is a bug on the platform where these scene APPs were created, or an update is made, these scene APPs will likely need to be recreated or updated one by one to work correctly. This applies in each of the following cases:

- When a Platform software update is required

- When a Designer update or change is needed on the scene

- When a User update or change is needed on the scene

When you face at least one of these circumstances, you have a decision to make. We know you wouldn’t prefer a scenario where 12 possible APP updates might be required. But there are no other options in the App-Driven approach. So, you have to re-create all of the APPs.

Data-Driven Infrastructure – “The Arsuite”

The Data-Driven approach saves all 3D scene information, 3D models, materials and textures as distributed datasets. When the user wants to examine a scene, all data about that scene is instantly collected from these distributed datasets and combined. The scene is then sent to the user.

As the inventor of the data-driven approach, Arsuite never creates separate APPs to store each 3D, AR, XR, VR or Metaverse scene information and assets. So, Arsuite never creates, publishes, or maintains repetitive apps like in the App-Driven model.

Arsuite has been designed in accordance with future data-driven / big data and AI architectures. Arsuite stores all 3D models, materials, assets or textures of the 3D, AR, XR, VR or Metaverse scenes in its advanced and distributed databases. In fact, we can consider the entire Arsuite Platform as a unified, ultra-smart AI (Artificial Intelligence).

Probably one of the most efficient and brilliant features of Arsuite AI is that it decides how much data to send to the user, depending on the user’s device and network connection. This superior intelligence gives Arsuite incredible speed and flexibility. Arsuite treats everything as a data stream and manages it from a single centre.

For example, to provide the best user experience, Arsuite optimizes the data to be sent by deciding on many parameters, such as texture quality, based on the user’s device resources and network connection.
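Here is a minimal sketch of how such a decision could look. It is not Arsuite’s actual logic: the thresholds, the texture sizes and the `DeviceProfile` fields are all assumptions chosen purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class DeviceProfile:
    """Hypothetical description of a client; field names are assumptions."""
    gpu_memory_mb: int
    bandwidth_mbps: float


def pick_texture_size(profile: DeviceProfile) -> int:
    """Choose a texture edge length (pixels) from illustrative thresholds."""
    # Device and network limits are evaluated separately; the weaker one wins.
    if profile.gpu_memory_mb >= 4096:
        by_device = 4096
    elif profile.gpu_memory_mb >= 2048:
        by_device = 2048
    else:
        by_device = 1024

    if profile.bandwidth_mbps >= 50:
        by_network = 4096
    elif profile.bandwidth_mbps >= 10:
        by_network = 2048
    else:
        by_network = 512

    return min(by_device, by_network)


desktop = DeviceProfile(gpu_memory_mb=8192, bandwidth_mbps=100.0)
phone_on_slow_link = DeviceProfile(gpu_memory_mb=2048, bandwidth_mbps=2.0)
print(pick_texture_size(desktop), pick_texture_size(phone_on_slow_link))
```

The point of the sketch is the shape of the decision: the same scene data can be served at different quality levels, which only works when the scene is data rather than a pre-built APP.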

Data-Driven vs App-Driven Approaches

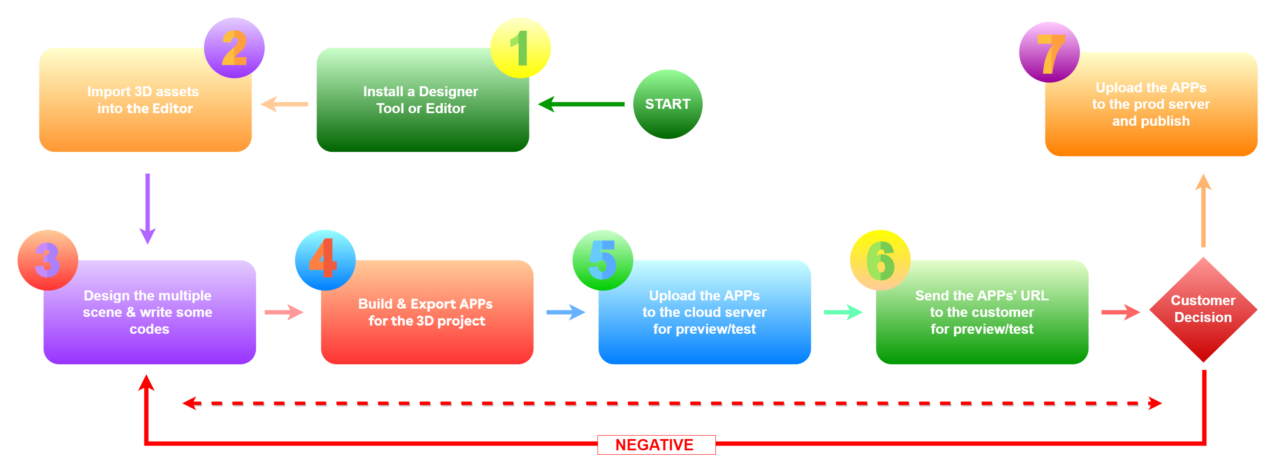

App-Driven Approach 3D, AR, XR, VR & Metaverse Development Flow

You have to create and publish an APP even when a simple Augmented Reality scene is created using most solutions in the global market. So, each time a new 3D, AR, XR, VR or Metaverse scene is created, the above loop must be executed, and a further separate APP must be created. When an update is required in one of these scenes, the related APP must be recreated using the flow here.

If there is to be an attractive, interactive and immersive Metaverse in the future, it certainly cannot be in the Application-Driven Model. We need a brand new Dynamic and Data-Driven Model.

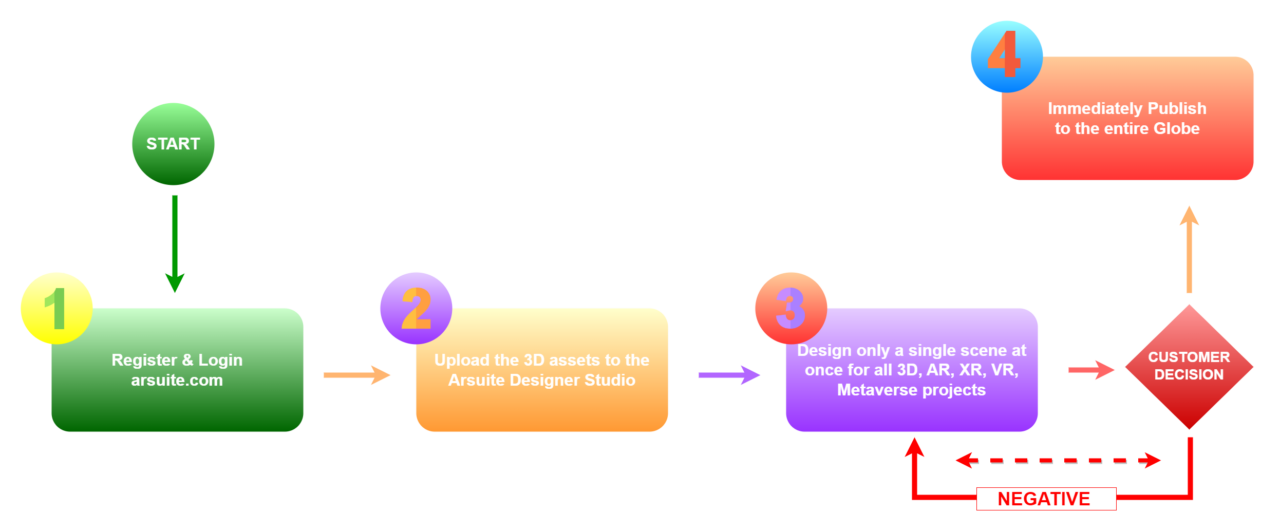

Dynamic Data-Driven Approach 3D, AR, XR, VR & Metaverse Development Flow

Everything in Arsuite runs in a highly dynamic and data-driven architecture. That allows Arsuite to dynamically load all of a scene’s assets into the scene in real time.

As soon as the user starts to open a 3D scene from Arsuite on any device, the assets of that scene are loaded and rendered. Before the loading and rendering process begins, that scene is not entirely stored or saved anywhere as a whole, as data, a dataset, or an app. In other words, there is no pre-baked/pre-created scene, dataset, component, APP, etc., at any design, development or publishing stage of Arsuite.

When any scene call request is received, Arsuite Immersive Intelligence collects the information and datasets of that scene from servers and databases in different locations, combines them and sends them to the user. That scene is created in real time on the user’s machine.
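The flow described here can be sketched in a few lines. This is an illustration under stated assumptions, not Arsuite’s implementation: three in-memory dictionaries stand in for the distributed servers and databases, and every name (`SCENE_STORE`, `MODEL_STORE`, `MATERIAL_STORE`, `assemble_scene`) is hypothetical.

```python
from typing import Dict, List

# Three in-memory dicts stand in for separate, distributed data stores.
SCENE_STORE: Dict[str, dict] = {
    "showroom": {"models": ["chair"], "lighting": "studio"},
}
MODEL_STORE: Dict[str, dict] = {
    "chair": {"polygons": 12000, "material": "oak"},
}
MATERIAL_STORE: Dict[str, dict] = {
    "oak": {"texture": "oak_diffuse", "roughness": 0.6},
}


def assemble_scene(scene_id: str) -> dict:
    """Build a scene payload on request; nothing is stored pre-assembled."""
    record = SCENE_STORE[scene_id]
    models: List[dict] = []
    for model_id in record["models"]:
        model = dict(MODEL_STORE[model_id])  # copy so the store stays untouched
        model["id"] = model_id
        model["material"] = dict(MATERIAL_STORE[model["material"]])
        models.append(model)
    return {"id": scene_id, "lighting": record["lighting"], "models": models}


payload = assemble_scene("showroom")
print(payload["models"][0]["material"]["texture"])

# Changing one dataset affects the next request, with no rebuild step.
MATERIAL_STORE["oak"]["roughness"] = 0.3
updated = assemble_scene("showroom")
```

Because the scene only exists as a combination of records, an update to a single material or model is visible the next time the scene is requested.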

Dynamic Asset Loading

Dynamic Asset Loading is the ability to add a new feature to any 3D scene at runtime, or to instantly change/update any existing feature. For example, a new 3D model may need to be added to the scene at any moment T, or the lighting and other properties of the scene may need to change instantly.

In order to load assets dynamically, the entire platform infrastructure must be suitable or prepared for it. Otherwise, dynamic asset loading will not work.

Real-Time Rendering & Dynamic Asset Loading

Warning: runtime/real-time rendering and Dynamic Asset Loading are entirely different things. Please don’t mix them up.

Real-time rendering is a method of rendering a scene immediately at runtime, rather than pre-baking or pre-rendering it. Real-time rendering doesn’t care much about how the scene is created. It renders the existing scene and presents it to the user.

Static Asset Loading

Static Asset Loading fixes, in advance, what is in the scene to be rendered. These assets, pre-baked or built in advance, cannot be changed. If you want to change them, you have to recreate the scene.

Dynamic Asset Loading

Dynamic Asset Loading determines what will be in the scene to be rendered and allows these assets to be changed in real time at any instant T.

In Dynamic Asset Loading, a scene exists only as parameters, data, datasets, materials and assets: the “gas and dust clouds” of a scene, so to speak. This information is collected, processed, rendered and presented to the user in real time. Since everything is a dynamic data block or data stream, it is delivered to the user very quickly, and the user is allowed to make changes at runtime at any moment T.
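The difference between the two loading models can be shown with a small sketch. Everything in it is hypothetical: `DynamicScene` and `bake_static_scene` are illustrative names, the asset values are made up, and a read-only mapping is used only to mimic a scene that was built in advance.

```python
from types import MappingProxyType


class DynamicScene:
    """Hypothetical scene whose assets can be changed while it is running."""

    def __init__(self):
        self.assets = {}

    def load_asset(self, name, data):
        # Add a new asset or overwrite an existing one at any moment.
        self.assets[name] = data

    def render(self):
        # Stand-in for real-time rendering: it draws whatever is loaded now.
        return sorted(self.assets)


def bake_static_scene(assets):
    """Return a read-only asset table, mimicking a pre-built scene."""
    return MappingProxyType(dict(assets))


scene = DynamicScene()
scene.load_asset("astronaut", {"polygons": 50000})
scene.load_asset("helmet_light", {"intensity": 0.8})  # added at runtime

baked = bake_static_scene({"astronaut": {"polygons": 50000}})
try:
    baked["helmet_light"] = {"intensity": 0.8}
    static_changed = True
except TypeError:
    static_changed = False  # a baked scene has to be rebuilt instead

print(scene.render(), static_changed)
```

Note that `render` behaves the same in both cases. Rendering in real time says nothing about whether the asset list itself can change, which is exactly the distinction drawn above.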

As you may have noticed, the App-Driven approach has no actual Dynamic Asset Loading because the 3D scene is built or exported at compile/design time.

Most platforms in the global market work within static asset-loading principles, and very few claim to support the dynamic asset-loading architecture. Even where they partially support dynamic asset loading, they are not comparable to Arsuite Dynamic Asset Loading technologies.

Those platforms can make minimal changes to the scene in real time or at runtime with only one method: adding some parametric features. They develop some features and materials in the scene parametrically and then bake/build the 3D scene. Depending on these parameters, they create a dynamic effect in the scene. However, everything still has to be defined in the app of that scene. When a request falls outside these parameters, they cannot process it, so the dynamic effect is lost. In other words, in this situation it is impossible to talk about genuine real-time dynamic asset loading.

In Arsuite, all assets are dynamically loaded into the scene in absolute real-time. In other words, as soon as the user starts to open a 3D scene from Arsuite on any device, the assets of that scene are loaded and rendered at runtime. The scene as a whole is never saved anywhere before the scene loading/rendering starts. So there is no concrete scene. The scene is stored as datasets in the distributed data architecture within the Arsuite platform.

When the scene call request comes to Arsuite, Arsuite Immersive Intelligence collects the entire data of that scene from servers and databases in different locations, combines it and sends it to the user. The scene is created and displayed in real-time on the user’s device.

If there is to be an attractive, interactive and immersive Metaverse in the future, it will undoubtedly be in the modern Dynamic Data-Driven Model. Otherwise, accessing Metaverses and Games from everywhere will not be possible, and integration and interoperability between them will never appear. That is a metaverse killer and a game-breaker situation.

Arsuite Smart Asset Manager – aSAM

aSAM is the world’s first intelligent CMS in the complex 3D design and development space. It is an ingenious centralized content management system that manages all models, materials and textures required for complex 3D, AR, XR, VR and Metaverse design and development.

For example, the most crucial components in 3D scenes are 3D models. One of the most important advantages of aSAM is that once a 3D model has been uploaded to the cloud, it can be used in a million different scenes without being uploaded again. That saves both storage and money.
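The “upload once, use everywhere” idea can be sketched as follows. This is not how aSAM is implemented; the `AssetStore` class is hypothetical, and using a content hash as the asset ID is an assumption made here to keep the example short.

```python
import hashlib


class AssetStore:
    """Hypothetical central store: each unique asset is kept exactly once."""

    def __init__(self):
        self._blobs = {}

    def upload(self, data: bytes) -> str:
        # The content hash is the asset ID, so identical uploads collapse.
        asset_id = hashlib.sha256(data).hexdigest()
        self._blobs.setdefault(asset_id, data)
        return asset_id

    def stored_bytes(self) -> int:
        return sum(len(blob) for blob in self._blobs.values())


store = AssetStore()
model_bytes = b"fake 3D model payload"  # placeholder, not a real model file
model_id = store.upload(model_bytes)

# Scenes hold only the ID; the model itself is never copied or re-uploaded.
scenes = [{"name": f"scene-{i}", "models": [model_id]} for i in range(1000)]

store.upload(model_bytes)  # a repeated upload adds nothing
print(len(scenes), store.stored_bytes() == len(model_bytes))
```

However many scenes reference the model, storage grows only by the size of one small ID per scene, not by the size of the model.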

aSAM is one of the most critical AI APIs of the Arsuite Dynamic Data-Driven Platform. aSAM achieves miraculous results in data and file streaming. A modern, dynamic, data-driven, cloud-native “Metaverse Builder” platform can only be established and operated with a well-designed, developed, fast-running, intelligent content management system.

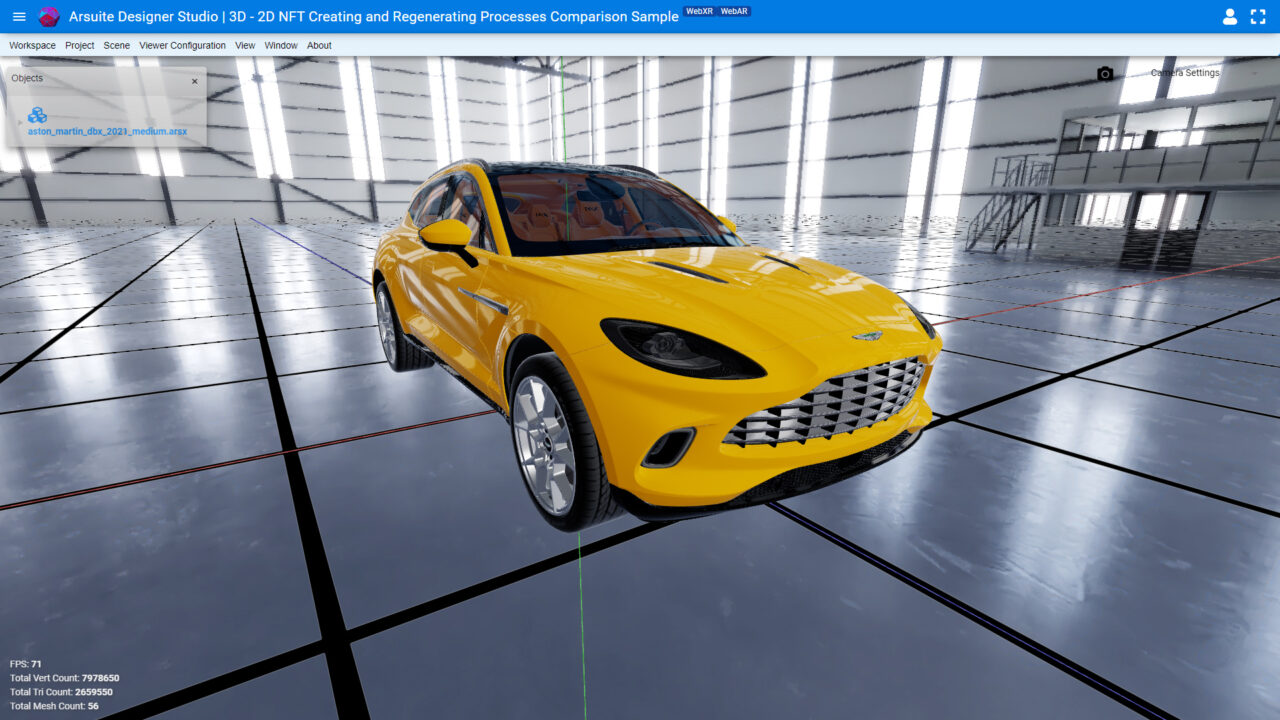

Click for the Astronaut sample. Please pay attention to the differences between the WebXR and WebAR reflections on the Astronaut’s helmet.

Dynamic Data-Driven 3D Model File Format

If you set out to create a brand new dynamic and data-driven model, you may struggle to complete it with existing static 3D model formats. After all, existing formats are designed for pre-rendered and baked systems. In that case, it is best to create a modern 3D file format that supports data-driven, data-stream-driven dynamic asset loading.

For this purpose, Arsuite has created the ARSX file format with a very modern Dynamic Data-Driven approach. ARSX, the result of Arsuite Immersive Intelligence R&D, is the leanest and most dynamic 3D file format.

Dynamic Data-Driven 3D & Web-Game Engine

Arsuite Engine is our 3D rendering/loading and 3D web game engine that brings out the best features of ARSX. Let’s take the following test scenario as an example.

- 1.5 Million polygons 3D model

- WebGL, Version 2.0

- Arsuite Designer Studio and Engine, Version 0.0.5 Beta

- Intel i7-4710HQ

- 16 GB RAM

- Nvidia GeForce GTX 860M 2GB

Arsuite Engine + ARSX is at least 30 times faster than .fbx-based engines, at least 320 times faster than .obj-based engines, at least 10 times faster than .gltf-based engines, and at least 14 times faster than .usdz-based engines. The benchmark was made under the above conditions.

As the model size or the number of polygons in the model increases, the speed difference between Arsuite and other engines widens sharply.

- A model with 1.5 million polygons is rendered in 1 second with .arsx and the arsx engine, 30 seconds with .fbx and an fbx-based engine, and 320 seconds with .obj and an obj-based engine.

- A model with 3 million polygons is rendered in 1.1 seconds with .arsx and the arsx engine, 90 seconds on average with .fbx and an fbx-based engine, and 1100 seconds on average with .obj and an obj-based engine. Here, the render time of .arsx with the arsx engine increased by only 10%, while the render times of the other engines increased 3 to 4 times.

- Tests were performed on a device with low resources.
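The ratios behind these figures are easy to check. The sketch below only re-computes the numbers quoted above; the dictionary layout and the function names `speedup` and `growth` are illustrative.

```python
# Render times in seconds, copied from the figures quoted above.
render_times = {
    1_500_000: {"arsx": 1.0, "fbx": 30.0, "obj": 320.0},
    3_000_000: {"arsx": 1.1, "fbx": 90.0, "obj": 1100.0},
}


def speedup(times, fmt):
    """How many times faster the ARSX pipeline is than `fmt`."""
    return times[fmt] / times["arsx"]


def growth(fmt):
    """Factor by which render time grows when the polygon count doubles."""
    return render_times[3_000_000][fmt] / render_times[1_500_000][fmt]


for polygons, times in render_times.items():
    print(polygons, round(speedup(times, "fbx"), 1), round(speedup(times, "obj"), 1))

for fmt in ("arsx", "fbx", "obj"):
    print(fmt, round(growth(fmt), 2))
```

With these inputs, the advantage over .fbx grows from 30 times to roughly 82 times, and over .obj from 320 times to roughly 1000 times, when the polygon count doubles.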

Conclusion

In the App-Driven approach, you must create an APP for each 3D scene. That means creating millions of APPs for millions of 3D scenes. In such a case, the amount of data, workload and resource consumption will be incredible. Today, many platforms or companies need better approaches to correctly design and manage massive 2D data. Imagine that data in 3D.

Considering that everything will be 3D and immersive in the future, no network will be able to carry data produced this irresponsibly and foolishly, even if 6G arrives before 5G.

The waste of resources created here will bring a lot of unnecessary costs to companies, and the excessive resource consumption will cause a lot of damage to the environment.

The articles below show how much data is uploaded to the internet every day under current 2D standards, along with Arsuite’s perspective on 5G/6G technologies.

How Much Data Is Created Every Day? [27 Staggering Stats]

How Much Data is Created on the Internet Each Day?

What is The Magic? 5G, Next-Gen GPUs – CPUs or Something Unknown?
