The Series

Future of Video Games and Metaverse Industries

Future of Video Games and Metaverse Industries III

As you know, interoperability and transferability have become both fashionable and essential concepts as Metaverse, AR, VR, and XR technologies have become widespread, or at least widely discussed. However, these technologies have formed around the game development ecosystem under badly mistaken strategies, and this slows the development of all these sectors. The GameDev ecosystem is a closed, inward-looking system in which a team focuses only on the game it is developing and does not care about that game’s communication or integration with the outside world.

In essence, each Game, AR, VR, and XR application is a Metaverse: a virtual universe created by people’s imagination in the digital world. Because each app is a universe in itself, these apps can form larger universes, “Omniverses”, by merging or integrating smoothly with each other. In other words, Metaverse is not a new technological concept; it should be considered an umbrella concept that better describes and covers existing technologies.

With the concepts of Metaverse and Omniverse, the capabilities of the 3D and game world, such as cloud-driven operation, interoperability and transferability, began to be questioned. In fact, cloud-native and fully communicating systems are new and unwelcome topics in the game world.

By communication or integration, we do not mean a simple connection; we mean robust and smooth connectivity that includes game asset transfer. Leaving aside artistic concerns, we’re talking about playing in game Y with a character from game X, and playing in all your favourite games with an avatar/character you created in Metaverse A.

Strong objections to such intensive communication and data transfer arise from the GameDev ecosystem. Many game designers and developers claim that interoperability between games, asset transfer and advanced integration are impossible. We can divide those who reject interoperability and transferability into two main groups.

- Those who say it is absolutely impossible. We can divide them into two:

  - The complainers, who make up most of this group and in effect say, “Please don’t cause us any new problems with these new concepts.”

  - Those who say that you cannot play game Y with a character from game X because the dynamics of the two games are very different from each other.

- Those who say that this requires advanced connection speeds such as 5G.

  - These friends dodge the question by saying that the circumstances are not right yet.

  - They say, “We want it too, but it is impossible with current connection speeds and server infrastructures because game data is too large.”

  - In effect, they say, “Even if you invent something new, please don’t bother us with it.”

When these objections are examined impartially and from a bird’s-eye view, it becomes clear that the matter is all about “data”. Both objections rest on how we process and manage “data”.

First, let’s start with 5G and see that data size does not prevent interoperability and transferability even on 4G/4.5G. Since 5G is all about the internet connection, it deals with network data at a slightly higher level. For instance, 5G is concerned with optimizing the transfer of data once it enters the open network; it is not concerned with the details or optimization of data exchange between games or applications. In short, it says, “Please send me optimized data!”

5G

We strongly recommend that you look at our article below regarding some of the terms we will use here and their usage.

- In this article, we will examine the problems in the 3D and 3D game worlds, which is our area of interest. But you can apply these reviews to any other industry.

- The article you are reviewing is not for those who do not trust themselves or their technology and are waiting for a Messiah. That saviour will never come.

- This article is for open minds only. You are loved <3

Let’s take a brief historical tour of how marketers, rather than technology, drive the market.

At the time of this interview, our big dream was to download an mp3 while connecting to the internet via dial-up. As asked in the discussion, what was the need for the internet when there was TV/Radio?

We were introduced to ADSL/VDSL technologies in the early 2000s. Service providers and tech leaders heavily promoted this new technology as the Messiah. According to them, once we started using these technologies, all our connection problems would be solved, and the world would become heaven. Does this narrative sound familiar?

In physics, there are candidate theories known as a “theory of everything”. These theories try to explain and unify the quantum and classical worlds in a single framework.

Today, many service providers are trying to sell us 5G as the solution/theory of everything. For example, they say that the delay in intercontinental data transmission will decrease to almost zero so that everything can be transmitted much more extensively, with higher quality and faster. But is it really so?

10% correct, 90% incorrect.

10%

In a mission-critical system like Davinci Surgical, which we reviewed in the article we linked above, 5G will be a saviour or Messiah. But to say that 5G is the theory of everything by generalizing it will really piss off physicists.

90%

First of all, the daily life of an ordinary person consists of a little social media, watching videos or playing games. They live a simple life far away from mission-critical work or transactions. For this 99.5% of the community, low latency, which is the biggest miracle of 5G, means nothing. However, this alone is not enough to explain the 90%. So let’s first explain the 90% and then talk about its reflection on the 3D and gaming industry.

Anyone who reads this article has seen a superhero movie at least once. The biggest lesson in superhero movies is the adage that “with great power comes great responsibility”. So as your power increases, your responsibility increases.

Our internet journey, which started with 56K dial-up, has reached gigabit speeds. Our journey, which began with a few MB of downloads per hour, has grown to more than 10 GB of downloads per hour. Where we once talked about mp3, mp4 and HD, today we talk about 4K and 8K video streaming.

From this, it is understood that the amount of data we produce has increased as our internet connection speed/power has increased. (power = responsibility <=> speed = data)

- At this link, you can see how much data some platforms send to a user per hour.

- You can see the disk sizes of some files at this link.

- You can see the sizes that some files can reach at this link.

- You can find information about the data produced daily at this link.

- You can find information about the data produced in one day on the internet at this link.

As we transitioned from 56K dial-up to fibre optic cables, we also exponentially increased the amount of data we generate and share. We have increased it so much that nowadays many of us complain about poor internet connections and about the screen freezing while we watch movies or play games.

Why have connection technologies introduced as a miracle or saviour 25 years ago become inadequate today? Let me ask you again, leaving you to calculate the speed difference between 56K and 10 Gbit. How did today’s internet connection speeds become insufficient?
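For readers who would rather not do the calculation by hand, here is a minimal sketch of the comparison. It assumes nominal link speeds in decimal units and ignores protocol overhead; the 5 MB mp3 size is a hypothetical example, not a figure from the linked sources.

```python
# Nominal link speeds in bits per second (decimal units, an assumption).
DIAL_UP_BPS = 56_000            # 56K dial-up modem
FIBRE_BPS = 10_000_000_000      # 10 Gbit/s connection

def seconds_to_transfer(size_bytes: int, link_bps: int) -> float:
    """Ideal transfer time, ignoring protocol overhead and congestion."""
    return size_bytes * 8 / link_bps

speed_ratio = FIBRE_BPS / DIAL_UP_BPS
mp3_bytes = 5_000_000           # hypothetical 5 MB mp3 file

dial_up_minutes = seconds_to_transfer(mp3_bytes, DIAL_UP_BPS) / 60
fibre_ms = seconds_to_transfer(mp3_bytes, FIBRE_BPS) * 1000

print(f"10 Gbit/s is roughly {speed_ratio:,.0f} times faster than 56K")
print(f"5 MB over dial-up: {dial_up_minutes:.1f} minutes")
print(f"5 MB over 10 Gbit/s: {fibre_ms:.1f} milliseconds")
```

The raw speed gain is roughly five orders of magnitude, which is why the question of how connections became insufficient again has to be answered on the data side.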

If you have looked at the video file sizes and stream speeds in the links above, you will have seen that a 4K video takes up 20 GB of disk space on average, and an 8K video takes up 36 GB. On the other hand, YouTube says it can stream 16 GB per hour for a 4K video. The remarkable point is that YouTube can stream 4K to 100 million users without user restrictions. What can YouTube do that others can’t?

What we want to say so far is that while connection speeds increase, the size of the data we produce does not remain constant. In fact, the amount of data we produce grows much faster than connection speeds do. Everyone talks about the bandwidth that will come with 5G, but no one talks about the amount of data humanity will be producing when that day comes, or about the data optimizations that this amount of data will require.
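To put the 16 GB per hour figure in connection-speed terms, here is a small conversion sketch. It assumes decimal units (1 GB = 1000 MB) and a perfectly steady stream, which real streaming services do not guarantee.

```python
def gb_per_hour_to_mbit_per_s(gb_per_hour: float) -> float:
    """Convert a sustained data rate from GB/hour to Mbit/s (decimal units)."""
    megabits = gb_per_hour * 1000 * 8   # 1 GB = 1000 MB = 8000 Mbit
    return megabits / 3600              # seconds in one hour

youtube_4k_rate = gb_per_hour_to_mbit_per_s(16)
print(f"16 GB/hour is about {youtube_4k_rate:.1f} Mbit/s")
```

Under these assumptions the stream needs about 35.6 Mbit/s, a rate that many ordinary home connections already provide.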

Note: We are not concerned with RAW 4K/8K video sizes. Please take the trouble to optimize/encode it and upload it to the internet or save it. Finding a disk to store the videos you have recorded as 4K/8K raw data is a problem, and uploading them to the internet is a huge problem. Don’t try!

Can 5G, the saviour of tomorrow, stay out of this cycle?

What is The Magic? 5G, Next-Gen GPUs – CPUs or Something Unknown?

We believe we have resolved the 5G-related issues in the article linked above, but since 5G is closely related to the subject of this article, we examined it from a slightly different angle. A short introduction to data transfer rates was essential, especially for data streaming and platform interoperability. Many people say that the topics we cover in the rest of the article are impossible with today’s internet and technologies, but we will show that they are possible by using every piece of data we have shared.

- How bright the future of the gaming and Metaverse industries will be depends on the methods and technologies of data sharing and transfer (interoperability).

- We can consider Metaverses as sets of games or interactive platforms that communicate with each other and can work together.

- Since every game is actually a metaverse, we will simply use the term “game industry” from now on.

Metaverses are not new. Metaverses are a massive cluster of games or platforms which has very robust interoperability.

Alper Akalin

Now let’s take a quick look at the statistics of today’s popular games.

- The games in the link below range from 77 GB to 250 GB.

- The link below shows that the Call Of Duty series’ average game time is 4-9.5 hours.

Black Ops Cold War PC Launch Trailer & Specs

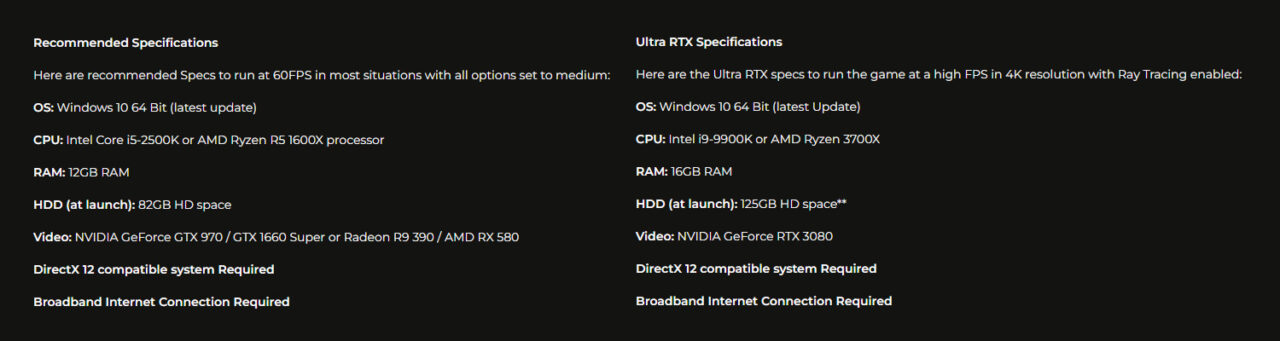

This link shows that Black Ops needs a minimum of 32 GB and a maximum of 125 GB of disk space on PCs. As can be understood from this link and the image, different game configurations need different amounts of disk space.

Black Ops

- 4-9.5 hours

- 32-125GB disk space

At YouTube’s streaming rate of 16 GB per hour, even the largest 125 GB installation could be streamed in 125/16 ≈ 7.8 hours. Considering that the maximum game time is 9.5 hours, this game could be downloaded and played simultaneously with today’s internet connections.

With today’s network infrastructure, some platforms can deliver 16 GB of data per hour to 100 million people without interruption, yet extremely rich and developed game companies that say they produce very advanced and high-quality games cannot deliver their games to us in the same way.

There is a big mistake somewhere, isn’t there?

By now, we hope we have made it clear that the failure to stream current games has nothing to do with data size. In other words, our existing network infrastructures could stream all of today’s games in a cloud-native way, regardless of file size. The main problem is neither file size nor the network; it is that games are not designed and developed to be cloud-native compatible.
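The arithmetic above can be written out as a short sketch. The criterion used here (total stream time no longer than total play time) is a deliberate simplification and our own assumption; it ignores which assets are needed first.

```python
STREAM_GB_PER_HOUR = 16  # YouTube 4K rate quoted in this article

def hours_to_stream(size_gb: float, rate: float = STREAM_GB_PER_HOUR) -> float:
    """Hours needed to deliver size_gb at a steady rate in GB/hour."""
    return size_gb / rate

def fits_within_playtime(size_gb: float, play_hours: float) -> bool:
    """Simplified check: can all data arrive before the playthrough ends?"""
    return hours_to_stream(size_gb) <= play_hours

min_install_hours = hours_to_stream(32)    # smallest Black Ops configuration
max_install_hours = hours_to_stream(125)   # largest Black Ops configuration

print(f"32 GB:  {min_install_hours:.1f} h to stream")
print(f"125 GB: {max_install_hours:.1f} h to stream")
print("125 GB fits in a 9.5 h playthrough:", fits_within_playtime(125, 9.5))
```

The point of the sketch is only that the totals are of the same order as the play time; whether a game can actually start before the download finishes depends on how its assets are organized.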

The First Mistake

Let’s leave the courtesy aside and dive hard into the subject. Several wrong approaches have turned into cancer in the gaming world. In the GameDev process, the incorrect positioning of the resources is the biggest problem. Let’s be clear; we are not scapegoating game developers or widely used GameDev platforms below. We all have a certain degree of respect and admiration for them.

- 99% of game developers and designers worldwide are not software developers in the engineering sense.

  - They can be very talented and experienced people or high-quality technical artists. However, they are not software developers in the engineering sense.

  - Since they mostly work in low-code game editors and write only small amounts of code, they avoid deep engineering-level coding and software architecture.

  - Many never get into the actual engineering part of game or software development.

  - They work far away from issues such as data, 3D data, web services, microservices, cloud computing and massive data streaming.

- There are many successful game engine platforms worldwide, and game developers work on these platforms. Since these proven platforms and communities were founded 20-25+ years ago, they are built on the needs and technologies of that day.

  - Their ability to work within today’s cloud-native architectures is minimal. Most of the time, they cannot work there at all.

  - Since they are extensive platforms, they do not dare to make the radical changes needed to meet today’s cloud-native needs.

  - Creating truly cloud-native, interoperable and transferable games or products with these platforms and human resources is almost impossible.

  - Products/games also have many limitations, for example support for only 100 simultaneous users.

  - Since devices with very high hardware specifications are targeted, they face serious problems, especially on the mobile side.

The Second Mistake

At the beginning of the article, we said that Game/AR/VR/XR/Metaverse are the same thing, although they may differ depending on the user and the context of use. However, trying to turn game developers or designers into AR/VR/XR/Metaverse developers is a critical mistake. That is what we call “adding insult to injury”.

- Many investors or prominent companies are enthusiastically entering the AR/VR/XR/Metaverse market.

- Their first move is to find a very successful game developer/designer, recruit him/her and put him/her in charge of their AR/VR/XR company or team. Superrr…

- Game developers with artistic experience may join the team, but they should not manage software development.

- If you put people in charge who lack an engineering-level understanding of data, 3D data, streaming and so on, they will make excuses instead of producing something. For example:

  - It’s impossible for us to do this without 5G.

  - Mobile devices are too inadequate for 3D operations.

  - We can only work in low-poly on mobile devices.

At this point, let’s unite the Game/AR/VR/XR/Metaverse worlds and continue. Although they have some differences of their own, the quality of processing and management of 3D data will affect all of them similarly since they all consist of 3D models, 3D assets, 3D textures or 3D materials, that is, 3D data. Let’s give a little spoiler here.

There is no difference between 3D data and data at the atomic level. When we can understand, interpret and process 3D data correctly, 95% of the problems we complain about today will be solved automatically. For example, while a 3D scene built from 3M polygons + a 4K Skybox + 4K textures exceeds 1 GB on well-known platforms, it remains at 0.095 GB in Arsuite. Which scene will be easier to “stream + load + render”, the 1 GB one or the 0.095 GB one? This takes no 5G, new GPUs, or new CPUs, just a well-designed algorithm + platform. Let’s not underestimate the platform; the platform itself is an advanced AI. 🙂
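As a rough illustration of what the 1 GB versus 0.095 GB difference means in practice, the sketch below estimates ideal transfer times. The 50 Mbit/s link speed is a hypothetical value chosen for the example, and the calculation ignores load and render time.

```python
LINK_MBIT_PER_S = 50  # hypothetical mobile connection speed

def transfer_seconds(size_gb: float, link_mbit_per_s: float = LINK_MBIT_PER_S) -> float:
    """Ideal transfer time in seconds (1 GB = 8000 Mbit, no overhead)."""
    return size_gb * 8000 / link_mbit_per_s

conventional_gb = 1.0    # scene size on well-known platforms (from the text)
optimized_gb = 0.095     # scene size reported for Arsuite (from the text)

reduction = conventional_gb / optimized_gb
print(f"Size reduction: about {reduction:.1f}x")
print(f"1 GB scene:     {transfer_seconds(conventional_gb):.0f} s")
print(f"0.095 GB scene: {transfer_seconds(optimized_gb):.1f} s")
```

On the assumed link, the smaller scene arrives in about 15 seconds instead of 160, without any change to the network.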

The Third Mistake

Monolith Architecture

3D Models, Assets, Materials or Environments, etc.

Today’s widely used game engines and development platforms emerged long before the cloud-native and mobile revolution. At that time, the best solution was to develop games as a single piece, put them on media such as CDs/DVDs, and sell them. No one has any objection to this.

We don’t criticize any of the existing platforms. However, in today’s 3D/Game technologies, everything is nested in a tree architecture. For example, consider a dungeon with an extensive map in a game. Let’s imagine that there are multiple rooms in the dungeon, depending on the level of the map.

In a game scene like the image below, chairs, tables, and rooms are all 3D models. The chairs are sub-models of the rooms and an essential part of them. In other words, they are integrated with the room after bake/build/export. Although there are some dynamic situations, these rooms are created in a way that cannot be changed after the game’s export/build time. If changes are to be made in the rooms, they should be determined from the beginning. Otherwise, the integrity of the game will be broken. For example, when you want to add a new room, or a new asset to a room, you have to run the whole bake/build/export process again. On the other side, the player must update the entire game app to get the new content.

Depending on the game/metaverse size, things get more complicated as 3D models, assets, and materials increase and game functions grow. Software engineering has methods such as Separation of Concerns (SoC) and Dependency Injection (DI). We are not talking about SoC or DI at the software level here; we are talking about SoC or DI at the model or asset level. At Arsuite, we call this Dynamic Asset Loading.
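To make the idea of asset-level dependency injection more concrete, here is a generic lazy-loading sketch. It is not Arsuite’s Dynamic Asset Loading implementation, which the article does not describe; `LazyAssetStore`, `fake_fetch` and the asset identifiers are hypothetical names invented for this example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

@dataclass
class LazyAssetStore:
    """Fetches an asset the first time it is requested, then caches it."""
    fetch: Callable[[str], bytes]                 # injected downloader (hypothetical)
    _cache: Dict[str, bytes] = field(default_factory=dict)
    fetch_count: int = 0

    def get(self, asset_id: str) -> bytes:
        if asset_id not in self._cache:
            # Only assets that are actually used are ever transferred.
            self._cache[asset_id] = self.fetch(asset_id)
            self.fetch_count += 1
        return self._cache[asset_id]

def fake_fetch(asset_id: str) -> bytes:
    """Stand-in for a network download; returns placeholder bytes."""
    return f"<mesh data for {asset_id}>".encode()

store = LazyAssetStore(fetch=fake_fetch)

# A room refers to its assets by id instead of embedding them at build time.
room_1_assets = ["chair", "table", "chair"]
meshes = [store.get(asset_id) for asset_id in room_1_assets]

print(f"Assets placed: {len(meshes)}, downloads performed: {store.fetch_count}")
```

Because the room only holds references, a chair can be replaced or added on the server without rebuilding the room, and an asset shared by several rooms is downloaded once.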

Let’s clarify what we have discussed so far with numbers.

- Let’s assume that the size of the assets in each room is 1GB on average, and the total size of rooms is 11 GB.

- In this dungeon, the player can visit all the rooms one by one and complete all the tasks.

- In this dungeon, the player can complete most tasks by entering many rooms.

- In this dungeon, the player can complete only necessary tasks by following the shortest path to the exit.

- We can multiply these scenarios and enlarge the map.

In today’s games, regardless of the scenario, you need to install all the game assets in this dungeon “map”, such as 3D models, textures and materials, on your machine. For example, even if a player leaves the dungeon immediately by following the 1-2-11 path, he/she has to load the remaining eight rooms and everything in them onto his/her device.

The player who has loaded all 11 rooms can quickly exit the dungeon by choosing the shortest route. In this case, the game engine/app will render only the areas on the player’s path. In other words, the game engine/app will not render unused areas or bring additional load to the player’s system. It’s perfect so far. The problem starts from this point.

The player who leaves the dungeon by following the 1-2-11 route will see only three rooms. In other words, downloading 3 GB of assets to the machine would be sufficient. However, due to the architecture of today’s game engines/apps, you must download all 11 GB of data to your device. You may say that 11 GB of data is unimportant and ask why we are so stuck on this point.

In a game with massive maps, 4K/8K materials, and models with 10 million+ polygons, disk sizes can increase to 40-50 GB. As a result of old-school approaches, the total size of a game can reach 250 GB. Even these numbers are insignificant considering today’s disks. So where, when and how much do these numbers matter?

- Have you ever thought about what would happen if robust optimizations were made to game assets and architecture? We gave a small example of 1 GB versus 0.095 GB above.
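The dungeon example can be checked with a few lines of code. This sketch uses the numbers given above (11 rooms, 1 GB each); the function name and the idea of billing only visited rooms are our own illustration of path-based loading.

```python
from typing import Dict, List

# 11 rooms of 1 GB each, as assumed in the example above.
ROOM_SIZES_GB: Dict[int, float] = {room: 1.0 for room in range(1, 12)}

def download_size_gb(path: List[int], sizes: Dict[int, float]) -> float:
    """Total size of the rooms a player actually visits (each counted once)."""
    return sum(sizes[room] for room in set(path))

monolith_gb = sum(ROOM_SIZES_GB.values())               # everything, up front
shortest_gb = download_size_gb([1, 2, 11], ROOM_SIZES_GB)
saved_gb = monolith_gb - shortest_gb

print(f"Monolith install: {monolith_gb:.0f} GB")
print(f"Path 1-2-11:      {shortest_gb:.0f} GB")
print(f"Never used:       {saved_gb:.0f} GB")
```

For the shortest route, 8 of the 11 GB are transferred and stored without ever being rendered.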

- Regarding engineering, game development and technical artistry are really different things.

The Fourth Mistake

Server Side Rendering “Multiplayer”

Things get more complicated in multiplayer games, right? As we all know, in multiplayer games, a player makes a move and sends this action to the game server, and the game server processes the move and transmits it to the other player. For example, the server says, “Player A punched you at point XYZ with intensity G.”

It is hard to talk about a healthy server-client relationship when millions of unnecessary pieces of data are circulating in a game built on a monolith architecture. The server has to process this unnecessary data while trying to provide robust communication between the two players. Considering that the data of thousands of players is processed on one server, it is easy to imagine the scale of the problem.

Today, massive games and all the assets belonging to them are placed in an unchangeable, unbreakable box, as in the video, and sold to us in fancy packages. Yes, many games require an internet connection and occasionally get updates or new levels. However, those operations are performed with rather primitive approaches. They cannot be called dynamic asset loading in the full sense of the term.
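The message flow described above can be sketched as follows. This is a toy, in-memory model with hypothetical names (`PunchEvent`, `GameServer`); a real game server would add networking, validation and state synchronization.

```python
import json
from dataclasses import asdict, dataclass
from typing import Dict, List, Tuple

@dataclass(frozen=True)
class PunchEvent:
    attacker: str
    target: str
    point: Tuple[float, float, float]   # hit position (x, y, z)
    intensity: float

class GameServer:
    """Toy relay: receives an action and forwards it to the affected player."""

    def __init__(self) -> None:
        self.inboxes: Dict[str, List[str]] = {}   # player id -> queued messages

    def join(self, player_id: str) -> None:
        self.inboxes[player_id] = []

    def handle(self, event: PunchEvent) -> str:
        if event.target not in self.inboxes:
            raise KeyError(f"unknown player: {event.target}")
        message = json.dumps(asdict(event))       # compact, action-only payload
        self.inboxes[event.target].append(message)
        return message

server = GameServer()
server.join("A")
server.join("B")
sent = server.handle(PunchEvent("A", "B", (1.0, 2.0, 3.0), 0.8))
received = json.loads(server.inboxes["B"][0])
print(f"B received a {len(sent)}-byte message: {received}")
```

The payload here contains only the action itself; the larger the amount of unrelated data that has to travel with each action, the more work the server does per player.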

The root of our problems is our strategies for processing, storing and managing data. Since everything is built on mobility and modularity today, we must find ways to develop games suitable for dynamic distributed data and streaming architecture instead of monolith game apps.

The Rise of Modularity and Cloud Computing – Streaming

At the time of writing, the sale of Activision Blizzard, one of the world’s largest game publishers, to Microsoft had been blocked by the UK’s Competition and Markets Authority (CMA) on the grounds that it could create a monopoly in cloud gaming.

Activision Blizzard’s CEO made a statement about the CMA decision, claiming that cloud gaming is unimportant today. Wouldn’t we expect a more futuristic vision from the CEO of a company being acquired by Microsoft at a value of $80 billion? Instead, there seems to be a higher vision at the CMA, a government agency. Nobody believed that, did they?

We think there is a purely commercial concern behind this rhetoric. The problem is not cloud gaming or a lack of vision; the problem is only that the sale was blocked. The statement about cloud gaming is just an argument in the case. As close followers of the acquisition and of Microsoft’s interest and statements, we believe serious investments in cloud gaming will soon begin to come one after another. Microsoft’s primary purpose was cloud gaming. So how can cloud gaming rise? That is the big question.

Cloud gaming can only rise on modularity and dynamic, data-driven architectures, not on 5G, 6G or next-generation CPUs and GPUs. If we do not organize game assets and apps according to modern technologies and build the data architecture correctly, the power of the technologies we will have in the future will be meaningless. The data we produce is growing faster than the technologies. Rest assured that unoptimized data will clog the system regardless of 5G/6G/7G.

In the short tour we took above, we saw that the data we produced increased exponentially with the infrastructure and devices we developed. Future technologies will have no meaning if we do not correctly build, process, classify and manage this data.

At Arsuite, we are open to and eager for all new technologies, but today’s device technologies and internet connections are sufficient. Our duty is to bring the games we develop together with suitable and practical algorithms and technologies.

If you have come this far, you have been informed in detail about the approach problems in the video game and metaverse world. Since the story of each game or company is different, we have yet to make suggestions as to what you should do. We drew a broad framework in the first article of the series. That framework will help you. However, in our next article, we will explain what we do at Arsuite and how we find solutions to the problems listed here. Examining this series of articles can help you to determine a roadmap according to your needs.
