Magic is just a word for all the things that haven’t yet been discovered. We don’t speculate on the magic. We create it!

— Alper Akalin

The article in the link below can provide helpful information about the birth of Arsuite and how to create a next-generation technology platform.

In this article, we will not criticize any technology widely used today. We think these technologies do their job quite successfully. We have learned so much from them, and from those who misused them, that we cannot thank them enough.

What we will criticize in this article, without hesitation, are technologies used in the wrong place and in the wrong way, and the people who use them that way, because we pay the bills for those mistakes.

For instance, we are sick of people saying we need 5G connection speeds and new CPUs/GPUs before immersive, interactive 3D “XR, VR, AR, Metaverse” technologies can become widespread.

They have probably never built anything from the ground up. They are just storytellers, or they keep trying to create something new by using someone else’s technologies in the wrong place or in the wrong architecture.

We do not understand what kind of result or success you expect from the scenes you create on a game engine not designed and optimized for XR, VR, or AR. Let’s burst this silly bubble together.

Even once 5G has arrived and new-generation processors exist, anyone with a well-designed data processing and streaming infrastructure will always be ahead of you.

While you send one 3D scene or 3D model to the consumer, someone else can send more than ten 3D scenes at the same time. You may be able to design a complex interface or a nice set, but if you don’t know how to stream or render that 3D scene with minimal resource consumption, you are left saying that we need 5G speeds and next-gen CPUs/GPUs, because you have no other option. But no, guys, we don’t need them.

Do we really need 5G and next-gen CPUs/GPUs? Or are all these just empty complaints and excuses?

AR/VR technologies are more widely used in the more developed parts of the world. There are no barriers to multi-user interactive VR/AR experiences at current connection speeds. For example, do you experience delays during a video call? No! (Please ignore extreme connection drops; they can happen on 5G or even 7G too.)

Probably the most significant innovations expected from 5G are a latency target below 1 ms and very high bandwidth. Is this a miracle or not? Let’s examine 5G through the Da Vinci Surgical System.

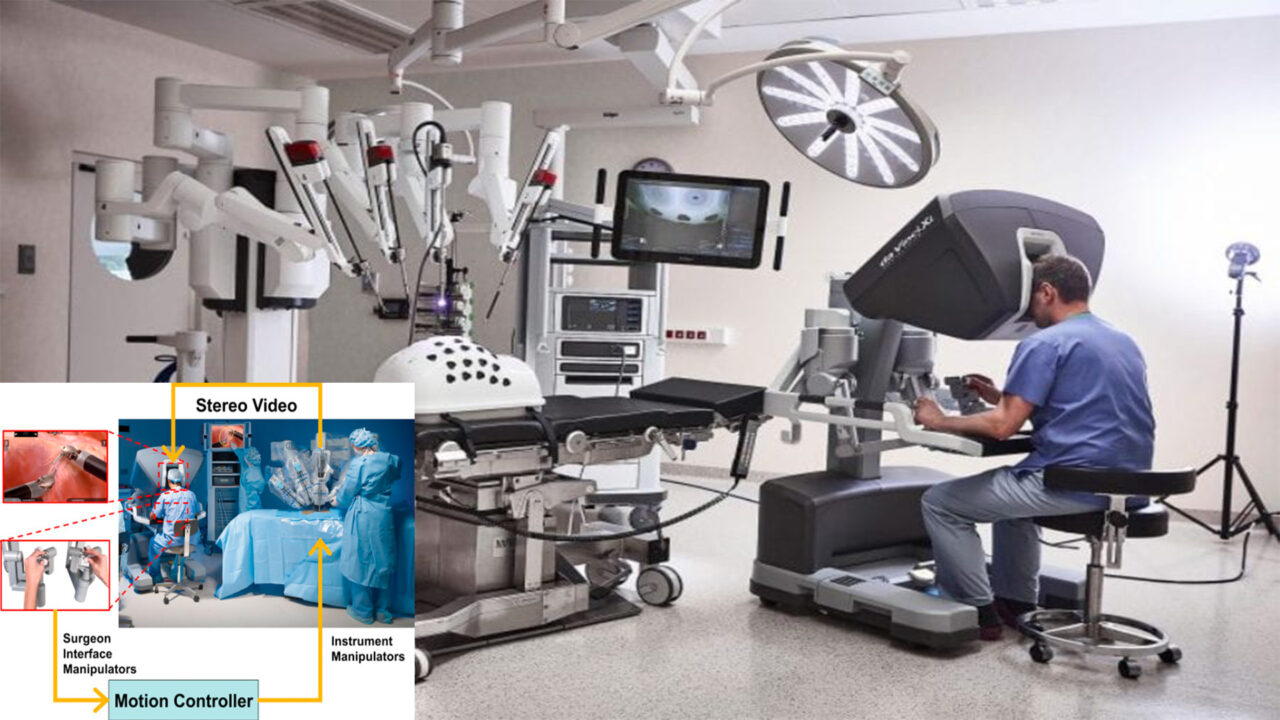

Da Vinci Surgical System

Yes, 5G is a miracle for the Da Vinci Surgical System. With this system, a surgeon in the USA can operate on a patient in China in real time. Current 4G/4.5G technologies cannot support this. Why?

The miracle of 5G comes from its bandwidth. In other words, you can send dense, large volumes of data from place to place with very low latency. We would love to explain this with electromagnetic wave theory like a physicist, but let’s keep it simple, shall we?

In this case, we can simplify 5G as follows. Suppose we have a single-channel cable. Suppose the internal structure of this cable is in the form of a thin tube. We can send 5, maybe 50, 8K movies simultaneously through this tube to a friend far away without delay. The current 4G/4.5G technology cannot support such intensive data transfers. However, 5G doesn’t seem to be coming soon, either.

With the Da Vinci Surgical System, a doctor in the United States operating on or treating a patient in China must be able to see the operating area with the shortest possible delay and the highest possible clarity. Otherwise, the operation may fail, and the patient may be lost.

In this example, the Da Vinci Surgical System should be able to send a 4K or 8K image from China to the doctor in America with almost zero delay. When the doctor in America gives a command to intervene in the operating area, this command should also reach China with almost zero delay. It is a miracle that this system works seamlessly in both directions. From this point of view, 5G can create miracles.

Metaverse

Let’s take the example of the Metaverse. That Metaverse should be as photorealistic, immersive and flawless as “Ready Player One”, not the low-quality, extremely low-poly worlds we see today. In addition, millions of people may be active in this Metaverse simultaneously. Can you imagine the data size and data traffic that kind of Metaverse would generate?

In such extreme cases, yes, we need 5G. However, non-optimized platforms will never work as expected, even on 5G. That is verified information! (A tip.)

- So, do we really need 5G in the flow of everyday life or in streaming a 3D “XR, VR, AR” scene?

- Do we need a more advanced processor family that has not yet been invented?

Guys, let’s say it up front: no!

The Mistake

For instance, there is a very famous AR glasses manufacturer in the AR tech world. They were probably founded over ten years ago. Their market valuation rose to $6 billion, then quickly dropped to $400 million. With the support of some Middle East VCs, they have since raised their valuation back to several billion dollars. So far, everything is perfect. They’ve created a fundraising success story, albeit with ups and downs. No one can object. However, they failed to turn a fundraising success story into a technology success story. Why?

Initially, they focused heavily on juicy features such as animation and interactivity. They may have chosen this method because of market creation and consumer demands. However, when this article was written, they still couldn’t sell a working pair of glasses.

If you do not, or cannot, calculate the processor, memory, storage and network load created by immersive 3D scenes, such as animated AR or interactive XR, that you build with the old methods and technologies you use, then you cannot stream or run them on any device. You can only share projection videos created with After Effects. Currently, many companies worldwide accomplish nothing more than video sharing, saying, “Look, guys, this is what we will invent at some point in the future.” Funny?

The game-changing point here is that almost all of the design and development tools you use were not created for the purposes you want to use them for. You can’t expect a game engine to produce an immersive 3D scene optimized for web or mobile browsers. Game engines should not claim that they can do this, yet they do. Sounds unbelievable?

The Past

From our point of view, many critical mistakes in the immersive world are caused by looking for the solution in the wrong place. The 3D design tools, rendering tools and game engines widely used today emerged in the 2000s. Is this a problem in itself? It’s not! Let’s take a look.

- The problem is that those tools were not created to support cloud-native systems or massive data transfers.

- The problem is that those tools have not brought their core infrastructure and engines up to date; they have settled for incremental updates. We are not saying it is a mistake; we are saying they are not fully prepared for a new and different world.

- The problem is that most of the newly developed tools have not produced anything new. They have only slightly improved the existing ones.

The problem is that our perspective on data, and how we stored it, was different in the 2000s because of technical and hardware constraints. In fact, it would be more accurate to say that in the 2000s we had reservations about writing data, that is, saving it. Back then, we did not keep any data we thought would not be used, because hardware was insufficient and expensive. There were even days when we shortened the necessary data before recording it. It’s pretty funny when you think about those days.

Nowadays, we record almost everything and even criticize those who do not. Today, deleting data or failing to save it has become more expensive than keeping it. Given how far technology has come in 20 years, it is evident that nothing new can be created with applications and frameworks written from the perspective of the 2000s, whose core parts still bear the traces of that period.

Creating something new

You cannot claim that we need 5G or next-generation CPUs/GPUs for immersive, interactive 3D “AR, XR, VR, Metaverse” technologies to work flawlessly when the real cause is wrong approaches and mistakes. Let’s prove it!

Instead of designing products compatible with existing, widespread and accepted technologies, you try to develop products with compatibility problems. The result will undoubtedly be a disappointment. The tragicomic part is that you are trying to create these products with widely used old technologies without creating new technology. In other words, you are trying to create extraordinary but incompatible products with standard and frequently used technologies. Don’t!

Massive data processing, data streaming, and 3D “AR, XR, VR, Metaverse” technology platform infrastructure are very different disciplines. Do your homework!

The Future

Let’s discuss the video below and the AR, XR, VR and Metaverse design and development methods common globally.

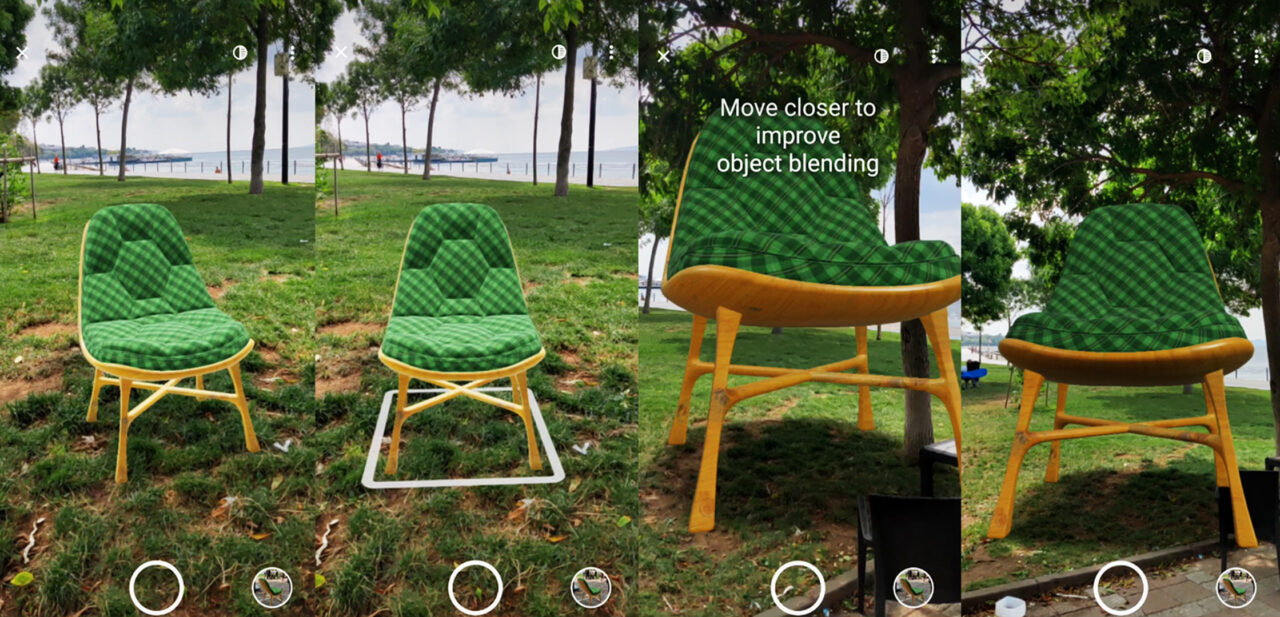

This 3D “AR/XR” chair scene with a 150K polygon 3D model + 2K texture + 4K HDRI Skybox was designed in Arsuite Designer Studio. The 3D scene has a scene size of 10–12 MB. You can examine the chair in XR and AR. We will only talk about AR.

When examining the chair, please pay attention to the download and opening speed. We downloaded and viewed this scene in 300–350 ms (download + render) on any mobile device with a 100 Mbit connection. Of this time, 85–90% is spent on network latency and 10–15% on rendering the 3D models and materials in the scene. (Due to Apple’s whims, render time remains unchanged for iOS users, while download time can be up to double.)

According to these benchmark results, downloading and opening a 3D scene created with Arsuite Engine takes less time than downloading and opening a single 2D jpeg/png file of the same size.
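The split quoted above can be turned into rough millisecond figures. The following is only a back-of-the-envelope sketch using the numbers from the text (300–350 ms total, 85–90% network); the helper name `split_ms` is hypothetical and not part of Arsuite.

```python
def split_ms(total_ms: float, network_percent: float) -> tuple[float, float]:
    """Split a total load time into (network_ms, render_ms)."""
    network_ms = total_ms * network_percent / 100
    return network_ms, total_ms - network_ms


# Figures quoted in the text: 300-350 ms in total, 85-90% of it spent on the network.
for total_ms in (300, 350):
    for network_percent in (85, 90):
        network_ms, render_ms = split_ms(total_ms, network_percent)
        print(
            f"total {total_ms} ms, network {network_percent}%: "
            f"network {network_ms} ms, render {render_ms} ms"
        )
```

Under these figures, rendering accounts for only a few tens of milliseconds, so the network leg is where optimization pays off most.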

- Please ignore the cameraman’s hand shaking while placing AR. 🙂

- Virtual skybox is not used in the AR scene. The reflections and shadows in the AR scene are completely taken from the real sky and environmental components.

The chair is placed on the lawn by a beach. You can see the chair’s shadow falling on the grass when you look carefully under the chair. You can see the shadows in the channels on the sponge when you look over the chair. You can see that the shadows also change or grow when you rotate or resize the chair.

The lighting and environmental effects picked up by the camera also change as you move closer to and away from the chair. As you can see in the video, these light changes are instantly reflected on the chair. Shadow and texture colour range immediately respond to this change.

The chair’s size, set to 85 cm in the design, was increased to 4 meters in the AR scene. Despite such an extreme change in size, the chair’s form and image quality have not deteriorated.

When we enlarge the chair five times and then move in to focus on the tree pattern on one leg of the chair and the hollows in the pattern, the quality of the texture can be seen very clearly. Even though the chair was magnified five times in AR, the texture was intact. Plus, as you can see, we compared the leg of the chair with a real tree. 🙂

The chair was then reduced to its actual size, i.e. 85 cm, and its textures were examined again up close. Focusing once more on the tree pattern on the leg of the chair and the cavities in the pattern, we again obtained excellent image quality and clarity. In other words, when the texture is at its original size, the visual quality is again beyond perfect when you zoom in on a point on the chair by moving closer.

Ok, someone kept saying we needed 5G for photorealistic, high-quality 3D “AR, XR, VR, Metaverse” scenes? Where are they now? We created a flawlessly photorealistic scene with only a 150K polygon 3D model + 2K texture + 4K HDRI Skybox. Now, imagine what we can do with a 3D model of over 5 million polygons + 4K texture + 4K HDRI Skybox. Yes, we can achieve even more than this in just a few seconds.

So what should we do to create a quality AR and XR scene and share it with the world? What should we actually not do?

The Big Mistake

Application Driven 3D “AR, XR, VR, Metaverse” Design & Development

The AR, XR, VR, and Metaverse design and development tools and APIs that are widely used globally generally come as plugins or extensions that work inside some other application. For example, AR, XR, VR, and Metaverse applications are created by adding a plugin to a game engine IDE or another design tool. Although a few applications work independently, they are unsuitable for widespread use.

3D “AR, XR, VR, and Metaverse” applications designed and developed with a plugin running on another platform inevitably carry the unnecessary code fragments and weaknesses of that development platform. You cannot avoid this.

For example, if we had created an XR and AR scene like the chair above through such a plugin, we would have had to design and develop separately for XR and AR and export each of them. (Export is the crucial keyword.)

The chair has WebXR and WebAR features simultaneously. In that case, we would have needed to design and build one application for the XR scene and a second application for the AR scene. In other words, we would have designed and developed the required AR and XR scenes at least twice and ended up with at least two different applications.

- You must design and develop AR, XR, VR, and Metaverse scenes on all those platforms separately, even when you use the same 3D model.

- You must develop and export separate applications for each AR, XR, VR, and Metaverse scene on all those platforms. However many scene types you have, you must develop or export an additional application for each of them.

- All those platforms you use for design and development will add unnecessary pieces of code to your applications by default. Unnecessary code means unnecessary performance loss and unnecessary file size.

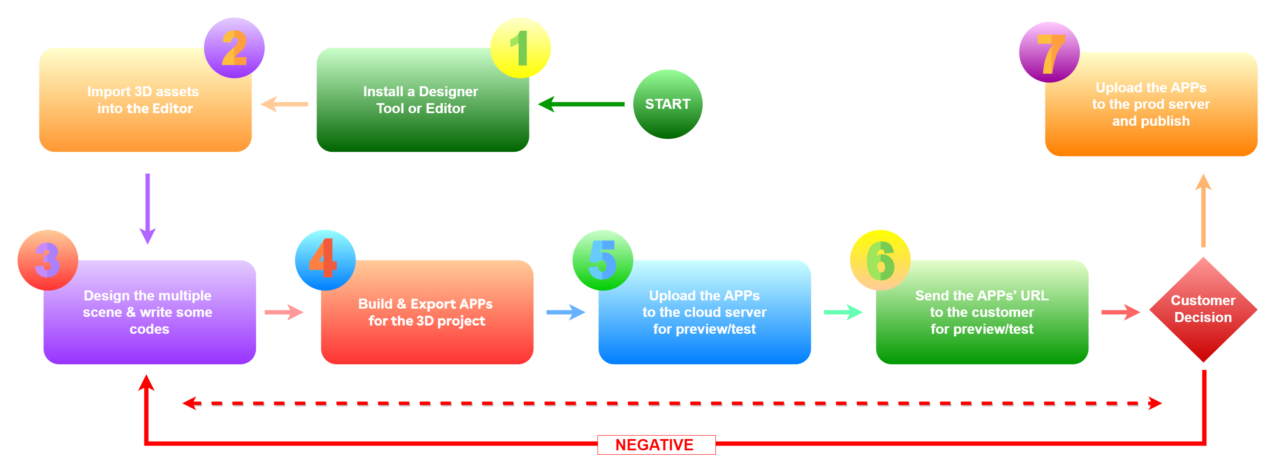

- When you want to make changes to your AR, XR, VR, and Metaverse scenes, you must redo everything and export all scenes as an application again. Please check their design & development flow on the image below.

- None of those platforms was made for AR, XR, VR or Metaverse design and development. So they don’t have any performance optimization for AR, XR, VR or Metaverse design or development.

- None of those platforms is optimized for cloud-native operations. Therefore, they do not know how to share the created AR, XR, VR or Metaverse scenes with the whole world in the fastest and safest way.

- None of those platforms is optimized to run on the web or in mobile browsers. In other words, AR, XR, VR or Metaverse scenes produced on these platforms are unsuitable for worldwide web use.

- On all those platforms, anyone who designs and develops AR, XR, VR or Metaverse scenes must have advanced design and coding knowledge.

- Those who will design and develop something on those platforms need a remarkably advanced backend infrastructure such as 3D Stream API, CDN, CMS, etc., to share the AR, XR, VR and Metaverse scenes on the web.

Application Driven 3D “AR, XR, VR, Metaverse” Design & Development Flow

For each AR, XR, and VR scene, we have to design, develop, and export each scene app separately, upload it to the cloud server, test (dev test) and send it to the client for testing (UAT).

If we get the design wrong or the customer does not like it, we have to redesign everything, then develop, export, upload to the cloud server, test, and send it back to the customer for testing.

- We have not even mentioned the significant additional development work, such as a 3D Stream API, web server, CDN management, CMS development and cyber security, needed for AR, XR, and VR scenes to work and be delivered properly.

Inevitably, we have to run this flow again and again for every new AR, XR, and VR scene requested from us. Designers, developers, users or customers who have had to go through this flow several times give up on AR, XR, VR, and Metaverse technologies after a while. 5G is the last and smallest of their problems.

Who would put up with such a long and complicated design and development process, and why? We believe you have a lot of work to do before whining about 5G.
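To make the cost of this flow concrete, here is a minimal sketch that counts how many times the steps listed above are executed. The step names come from the text; the function name and the example revision count are assumptions made only for illustration.

```python
# Steps of the application-driven flow, as listed in the text.
APP_DRIVEN_STEPS = ["design", "develop", "export", "upload", "dev test", "UAT"]


def app_driven_step_runs(scene_types: list[str], revisions: int) -> int:
    """Count step executions when every scene type is a separate application.

    Each scene type runs the whole flow once, plus once more for every
    rejected design (revision), because everything must be redone and re-exported.
    """
    return len(scene_types) * len(APP_DRIVEN_STEPS) * (1 + revisions)


# Hypothetical example: one chair published as AR, XR and VR, with two rejected designs.
runs = app_driven_step_runs(["AR", "XR", "VR"], revisions=2)
print(f"{runs} step executions for a single 3D model")
```

The count grows with both the number of scene types and the number of revisions, which is why the flow becomes exhausting after only a few rounds.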

Application Driven 3D “AR, XR, VR, Metaverse” Design & Development Result

Let’s move forward based on the chair above. We must create three different applications with a 150K polygon 3D model + 2K texture + 4K HDRI. That will significantly complicate the design, development and publishing processes and dramatically increase the amount of data stored and published on the cloud server. For various reasons, such as lack of optimization and unnecessary code added to the applications, these applications will be several times larger than they should be. 5G and next-generation processors, please don’t be late! We need you more than ever! 🙂

If you have come this far in the article, we are sure you are now a solid 5G follower.

Now you see: in such a misconfigured model, AR, XR, VR, and Metaverse scenes can become relatively large, and running them without 5G and new-generation processors is impossible.

Maybe you simply should not use the design and development methods described so far!

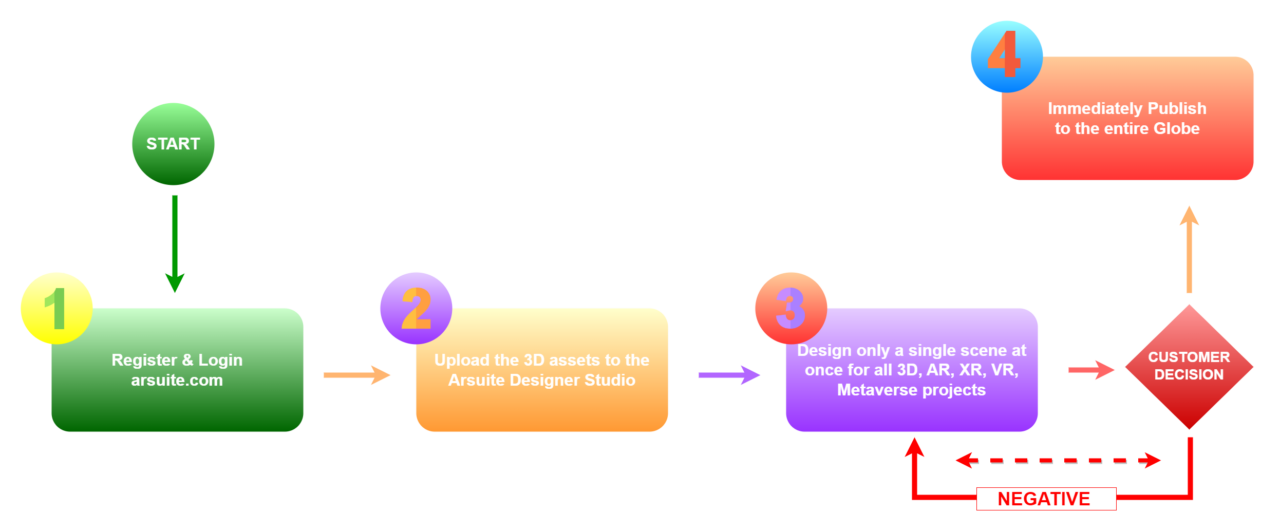

Data-Driven 3D “AR, XR, VR, Metaverse” Design & Development

As can be understood from what we have discussed so far, we need a brand-new 3D “AR, XR, VR, Metaverse” development model. It is essential that this new model adopts modern data processing methods and supports cloud-native systems. If a platform that supports these methods is possible, then:

- It should be completely Cloud-Native.

- It should have a fully Cloud-Native design studio.

- It should fully support No-Code development.

- It should have a new data architecture for 3D models.

- It should have a new data architecture for 3D scenes.

- It should have a new data architecture for 3D scene materials.

- It should have an infrastructure that supports “dynamic asset loading” mechanisms.

- It should have an infrastructure that can change any asset in the 3D scene remotely, instantly and in real-time.

- It should have additional and compelling optimizations for mobile devices.

- It should have a radically simplified design and publishing flow.

- It should have a CMS and CDN infrastructure that is highly optimized for extremely high-poly 3D models, high-quality textures and materials.

- It should have a 3D engine and CMS infrastructure suitable for reuse. For example, thousands of different 3D scenes can be created using just one 3D model over and over. In other words, a single 3D model should be reusable in thousands of 3D AR, XR, VR, and Metaverse scenes.

- It should have an advanced data-stream infrastructure that is highly optimized for 3D models, high-quality textures and materials.

- It should make all of these advanced features available in a way that is simple for anyone to use.
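Two items in this list, reusing one 3D model across many scenes and changing an asset remotely for every scene at once, can be illustrated with a small data model. This is only a sketch under our own assumptions: the class and function names are hypothetical, the 12 MB size is illustrative, and none of it describes Arsuite’s actual architecture.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Asset:
    asset_id: str
    size_mb: float
    version: int = 1


@dataclass
class Scene:
    name: str
    mode: str  # e.g. "AR", "XR" or "VR"
    asset_ids: List[str] = field(default_factory=list)  # references, not copies


class AssetStore:
    """Hypothetical central store: each asset is kept once and loaded by ID."""

    def __init__(self) -> None:
        self._assets: Dict[str, Asset] = {}

    def put(self, asset: Asset) -> None:
        self._assets[asset.asset_id] = asset

    def get(self, asset_id: str) -> Asset:
        return self._assets[asset_id]

    def replace(self, asset_id: str, size_mb: float) -> None:
        """Swap an asset remotely; every scene referencing it sees the new version."""
        old = self._assets[asset_id]
        self._assets[asset_id] = Asset(asset_id, size_mb, old.version + 1)

    def stored_mb(self) -> float:
        return sum(asset.size_mb for asset in self._assets.values())


def resolve(scene: Scene, store: AssetStore) -> List[Asset]:
    """Dynamic asset loading: look assets up when the scene is opened."""
    return [store.get(asset_id) for asset_id in scene.asset_ids]


def duplicated_mb(scenes: List[Scene], store: AssetStore) -> float:
    """Storage needed if every scene embedded its own copy of each asset."""
    return sum(store.get(a).size_mb for s in scenes for a in s.asset_ids)


store = AssetStore()
store.put(Asset("chair", size_mb=12.0))  # illustrative size
scenes = [Scene(f"chair-{mode}", mode, ["chair"]) for mode in ("AR", "XR", "VR")]

print("shared storage (MB):", store.stored_mb())
print("duplicated storage (MB):", duplicated_mb(scenes, store))

store.replace("chair", size_mb=10.0)
print("versions seen by scenes:", [resolve(s, store)[0].version for s in scenes])
```

Because scenes hold only references, the model is stored once however many scenes use it, and a single `replace` call updates all of them without any re-export.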

We could extend this list with more of the advanced features in Arsuite, but let’s leave it there for now. If you want, you can add your own suggestions in the comments. We at Arsuite will definitely consider them.

- If you have a platform with the features in this list, you definitely do not need 5G or new-generation CPU/GPU processors.

- If you have such a platform, then even once 5G and new-generation processors arrive, you will still be a million light years ahead of everyone else.

Building such a platform is much better than waiting for others to develop new technologies. For example, when you consider the GSM base stations that need to be installed for 5G, there is no predicting how long you will have to wait. Of course, waiting is also a choice; some may wait for new technologies to become widespread before working on 3D “AR, XR, VR, Metaverse”. But when that day comes, we are sure they will say that we must wait for 6G.

If you want to develop such a platform, you will need to find answers to the following questions.

- Can such a platform be developed?

- Who can develop such a platform, and how and for how long?

- Yes, it can be built. (It has been developed: Arsuite Immersive Intelligence.)

- If you have a knowledgeable and experienced team in 3D, 3D engines, game engines, cloud-native platforms and cloud computing, you can develop such a platform in 3 to 5 years with a suitable development pipeline, depending on the number of teams.

A spoiler from Arsuite Immersive Intelligence

Many people believe that technologies not yet in service are needed before advanced, data-heavy technologies such as 3D “AR, XR, VR, Metaverse” can become widespread.

Even if we have higher connection speeds or new generations of more advanced processors in the future, the growing amount of data circulating on the internet means we will always need software, platforms and devices that are optimized to the maximum. Especially since that data will become denser and larger with 3D “AR, XR, VR, Metaverse” features, even 7G, never mind 5G, may not be able to handle the resulting data load. “Law of action and reaction.” Sir Isaac Newton.

As we all know, the amount of data circulating on and uploaded to the internet is increasing exponentially. In other words, people are uploading more and more data to the internet every day. By clicking on the links below, you can review some of the global research on the amount of data uploaded to the internet. Imagine the problems of systems that are not optimized with these studies in mind!

How Much Data Is Created Every Day? [27 Staggering Stats]

How Much Data is Created on the Internet Each Day?